- cross-posted to:

- technology@beehaw.org

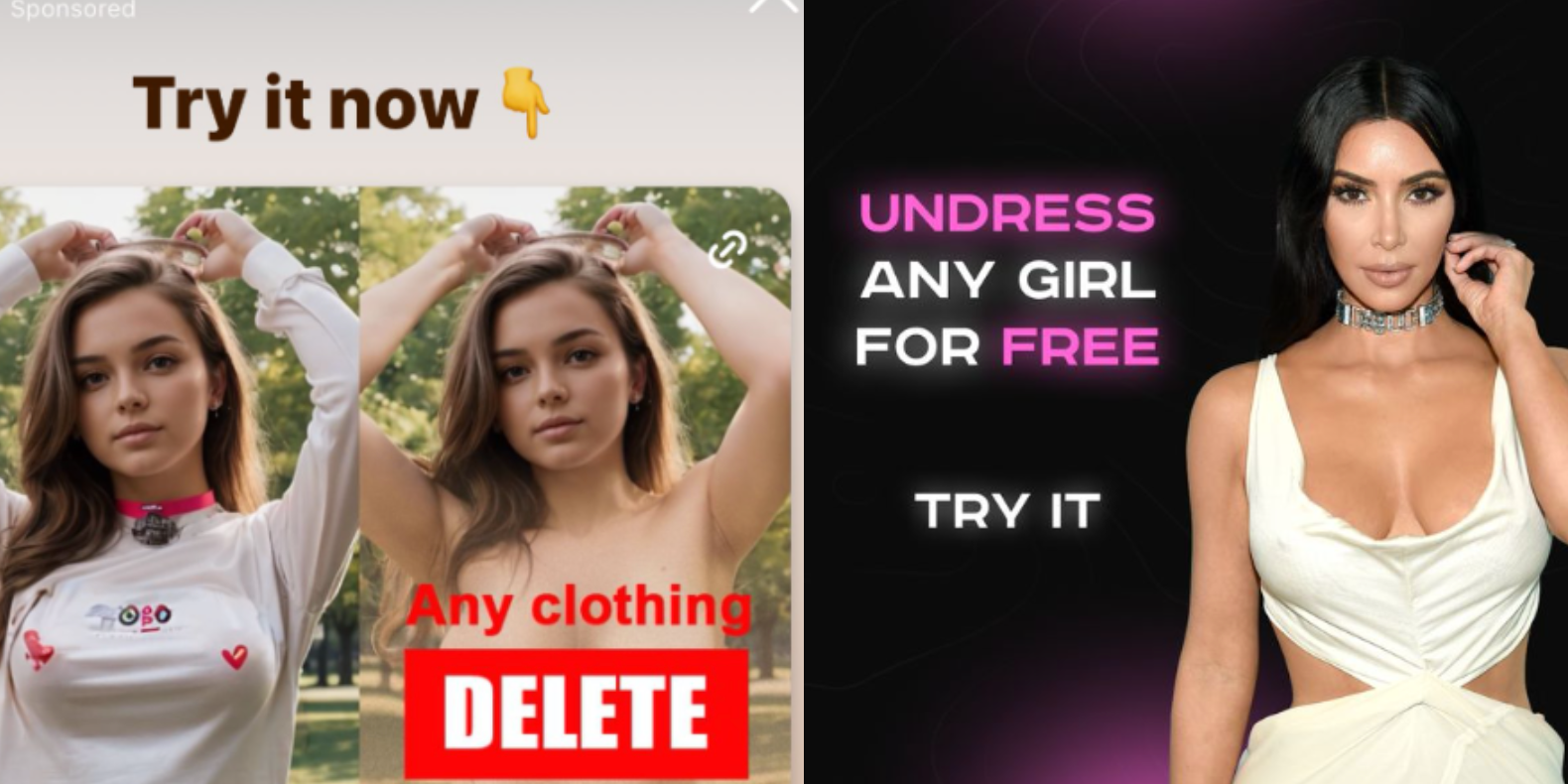

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden on the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes, and some of the ad buyers behind them, were still active until I reached out to Meta for comment. Some of these ads were for the best-known nonconsensual “undress” or “nudify” services on the internet.

It remains fascinating to me how these apps are being responded to in society. I’d assume part of the point of seeing someone naked is to know what their bits look like, while these just extrapolate with averages (and likely, averages of glamor models). So we still don’t know what these people actually look like naked.

And yet, people are still scorned and offended as if they were.

Technology is breaking our society, albeit in places where our culture was vulnerable to being broken.

How dare that other person I don’t know and will never meet gain sexual stimulation!

My body is not inherently for your sexual stimulation. Downloading my picture does not give you the right to turn it into porn.

Did you miss what this post is about? In this scenario it’s literally not your body.

There is nothing stopping anyone from using it on my body. Seriously, get a fucking grip.

Regardless of what one might think should happen or expect to happen, the actual psychological effect is harmful to the victim. It’s like if you walked up to someone and said “I’m imagining you naked” that’s still harassment and off-putting to the person, but the image apps have been shown to have much much more severe effects.

It’s like the demonstration where they get someone to feel like a rubber hand is theirs, then hit it with a hammer. It’s still a negative sensation even if it’s not a strictly logical one.

I think half the people who are offended don’t get this.

The other half think that it’s enough to cause hate.

Both arguments rely on enough people being stupid.

I suspect it’s more affecting for younger people who don’t really think about the fact that in reality, no one has seen them naked. Probably traumatizing for them and logic doesn’t really apply in this situation.

Wtf are you even talking about? People should have the right to control if they are “approximated” as nude. You can wax poetic about how it’s not necessarily correct, but that’s because you are ignoring the woman who did not consent to the process. Like, if I posted a nude then that’s on the internet forever. But now, any picture at all can be made nude and posted to the internet forever. You’re entirely removing consent from the equation you ass.

Totally get your frustration, but people have been imagining, drawing, and photoshopping people naked since forever. To me the problem is if they try and pass it off as real. If someone can draw photorealistic pieces and drew someone naked, we wouldn’t have the same reaction, right?

It takes years of practice to draw photorealism, and days if not weeks to draw a particular piece. That is absolutely not the same as any jackass with a net connection and 5 minutes being able to create an equally or more realistic version.

It’s really upsetting that this argument keeps getting brought up. Because while guys are being philosophical about how it’s theoretically the same thing, women are experiencing real world harm and harassment from these services. Women get fired for having nudes, girls are being blackmailed and bullied with this shit.

But since it’s theoretically always been possible, somehow churning through any woman you find on Instagram isn’t an issue.

Do you? Since you aren’t threatened by this, yet another way for women to be harassed is just a fun little thought experiment.

Well that’s exactly the point from my perspective. It’s really shitty here in the stage of technology where people are falling victim to this. So I really understand people’s knee-jerk reaction to throw on the brakes. But then we’ll stay here where women are being harassed and bullied with this kind of technology. The only paths forward, theoretically, are to remove it altogether or to make it ubiquitous background noise. Removing it altogether, in my opinion, is practically impossible.

So my point is that a picture from an unverified source can never be taken as truth. But we’re in a weird place technologically, where unfortunately it is. I think we’re finally reaching a point where we can break free of that. If someone sends me a nude with my face on it like, “Is this you?!!”, I’ll send them one back with their face like, “Is tHiS YoU?!??!”.

We’ll be in a place where we as a society cannot function taking everything we see on the internet as truth. Not only does this potentially solve the AI nude problem, it can solve the actual nude leaks / revenge porn, other forms of cyberbullying, and mass distribution of misinformation as a whole. The internet hasn’t been a reliable source of information since its inception. The problem is, up until now, it’s been just plausible enough that the gullible fall into believing it.

I think the offense is in the use of their facial likeness far more than their body.

If you took a naked super-sized barbie doll and plastered Taylor Swift’s face on it, then presented it to an audience for the purpose of jerking off, the argument “that’s not what Taylor’s tits look like!” wouldn’t save you.

Unregulated advertisement combined with a clickbait model for online marketing is fueling this deluge of creepy shit. This isn’t simply a “Computers Evil!” situation. It’s much more that a handful of bad actors are running Silicon Valley into the ground.

Not so much computers evil! as just acknowledging there will always be malicious actors who will find clever ways to use technology to cause harm. And yes, there’s a gathering of folk on 4Chan/b who nudify (denudify?) submitted pictures, usually of people they know, which, thanks to the process, puts them out on the internet. So this is already a problem.

Think of Murphy’s Law as it applies to product stress testing. Eventually, some customer is going to come in having broken the part you thought couldn’t be broken. Also, our vast capitalist society is fueled by people figuring out exploits in the system that haven’t been patched or criminalized (see the subprime mortgage crisis of 2008). So we have people actively looking to utilize technology in weird ways to monetize it. That folds neatly like paired gears into looking at how tech can cause harm.

As for people’s faces, one of the problems of facial recognition as a security tool (say when used by law enforcement to track perps) is the high number of false positives. It turns out we look a whole lot like each other, though your doppelganger may be in another state and ten inches taller / shorter. In fact, an old (legal!) way of getting explicit shots of celebrities from the late 20th century was to find a look-alike and get them to pose for a song.

As for famous people, fake nudes have been a thing for a while, courtesy of Photoshop or some other digital photo-editing set combined with vast libraries of people. Deepfakes have been around since the late 2010s. So even if generative AI wasn’t there (which is still not great for video in motion) there are resources for fabricating content, either explicit or evidence of high crimes and misdemeanors.

This is why we are terrified of AI getting out of hand, not because our experts don’t know what they’re doing, but because the companies are very motivated to be the first to get it done, and that means making the kinds of mistakes that cause pipeline leakage on sacred Potawatomi tribal land.

I mean, I’m increasingly of the opinion that AI is smoke and mirrors. It doesn’t work and it isn’t going to cause some kind of Great Replacement any more than a 1970s Automat could eliminate the restaurant industry.

It’s less the computers themselves and more the fear surrounding them that seems to keep people in line.

The current presumption that generative AI will replace workers is smoke and mirrors, though the response by upper management does show the degree to which they would love to replace their human workforce with machines, or replace their skilled workforce with menial laborers doing simpler (though more tedious) tasks.

If this is regarded as them tipping their hands, we might get regulations that serve the workers of those industries. If we’re lucky.

In the meantime, the pursuit of AGI is ongoing, and the LLMs and generative AI projects serve to show some of the tools we have.

It’s not even that we’ll necessarily know when it happens. It’s not like we can detect consciousness (or are even sure what consciousness / self awareness / sentience is). At some point, if we’re not careful, we’ll make a machine that can deceive and outthink its developers and has the capacity for hostility and aggression.

There’s also the scenario (suggested by Randall Munroe) that some ambitious oligarch or plutocrat gains control of a system that can manage an army of autonomous killer robots. Normally such people have to contend with a principal cabinet of people who don’t always agree with them. (Hitler and Stalin both had to argue with their generals.) An AI can proceed with a plan undisturbed by its inhumane implications.

I can see how increased integration and automation of various systems consolidates power in fewer and fewer hands. For instance, the ability of Columbia administrators to rapidly identify and deactivate student ID cards and lock hundreds of protesters out of their dorms with the flip of a switch was really eye-opening. That would have been far more difficult to do 20 years ago, when I was in school.

But that’s not an AGI issue. That’s an “everyone’s ability to interact with their environment now requires authentication via a central data hub” issue. And it’s illusory. Yes, you’re electronically locked out of your dorm, but it doesn’t take a lot of savvy to pop through a door that’s been propped open with a brick by a friend.

I think this fear heavily underweights how much human labor goes into building, maintaining, and repairing autonomous killer robots. The idea that a singular megalomaniac could command an entire complex system - hell, that the commander could even comprehend the system they intended to hijack - presumes a kind of Evil Genius Leader that never seems to show up IRL.

Meanwhile, there’s no shortage of bloodthirsty savages running around Ukraine, Gaza, and Sudan, butchering civilians and blowing up homes with sadistic glee. You don’t need a computer to demonstrate inhumanity towards other people. If anything, it’s our human-ness that makes this kind of senseless violence possible. Only deep ethnic animus gives you the impulse to diligently march around butchering pregnant women and toddlers, in a region that’s gripped by famine and caught in a deadly heat wave.

Would that all the killing machines were run by some giant calculator, rather than a motley assortment of sickos and freaks who consider sadism a fringe benefit of the occupation.